Hello everyone, and welcome back to the AI News Articulator. A lot happened in the world of AI this week. The release of OpenAI’s GPT-4o mini was one of the most important events. This week, I talk about some of the most important ideas in artificial intelligence so that you can better understand OpenAI’s latest release and what it means for end users. I also compare it to similar models from Anthropic and Google to give you some background.

How GPT-4o mini differs from GPT-4o

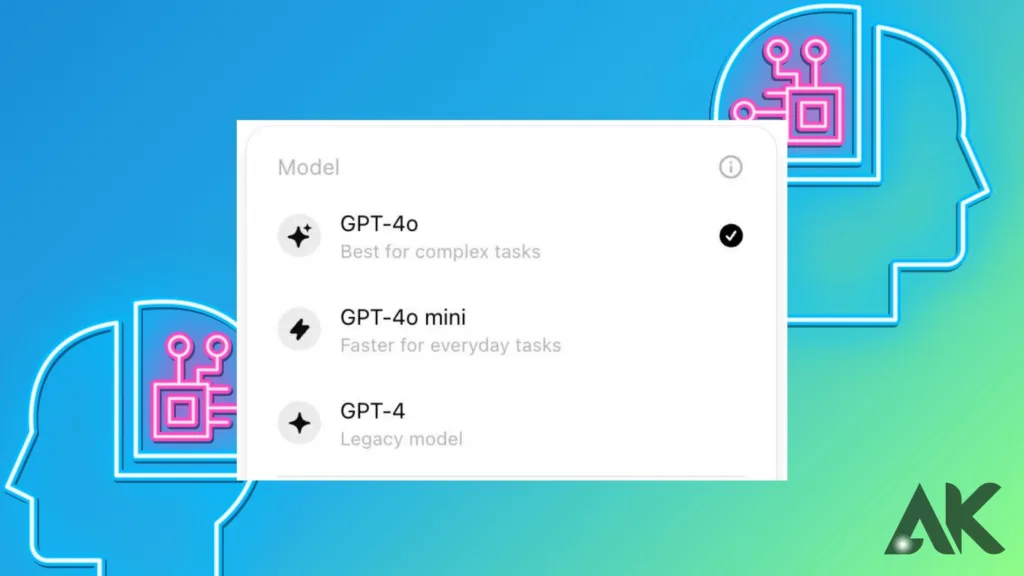

ChatGPT Free users already have access to GPT-4o, OpenAI’s best model. It was announced in early May, right before Google showed off all of its AI innovations at I/O 2024. Free ChatGPT accounts, however, do not get unlimited access to GPT-4o. That is where GPT-4o mini helps: once you reach your GPT-4o limit, it takes over, replacing GPT-3.5.

In a blog post, OpenAI described what the lightweight language model can do. To begin with, it costs more than 60% less than GPT-3.5 Turbo: input tokens cost $0.15 per million and output tokens cost $0.60 per million. That information matters to developers. For ChatGPT Free users, who won’t have to pay to use the model, it doesn’t change anything.

These are the features that matter to users. GPT-4o mini supports both text and vision in the ChatGPT apps and the API. OpenAI says that multimodal support will expand in the future to cover text, image, video, and audio.

GPT-4o mini also has a 128K-token context window and can return up to 16K output tokens per request. In ChatGPT, however, the context window depends on the plan: ChatGPT Free users get an 8K-token context window, ChatGPT Plus and Team users get up to 32K tokens, and the full 128K-token context window is reserved for the Enterprise tier. Lastly, the knowledge cutoff for GPT-4o mini is October 2023, which is much later than GPT-3.5 Turbo’s cutoff of September 2021.
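To make the plan limits concrete, here is a minimal sketch that checks whether a conversation of a given length fits a plan's context window. The tier names, the `fits_in_context` helper, and the rounding of "8K", "32K", and "128K" to 8,000, 32,000, and 128,000 tokens are assumptions made for illustration; the exact limits may differ.

```python
# Context window sizes per ChatGPT plan, in tokens.
# Assumption: "K" is treated as 1,000 tokens for simplicity.
CONTEXT_WINDOW_TOKENS = {
    "free": 8_000,
    "plus": 32_000,
    "team": 32_000,
    "enterprise": 128_000,
}


def fits_in_context(tier: str, conversation_tokens: int) -> bool:
    """Return True if the conversation fits the plan's context window."""
    return conversation_tokens <= CONTEXT_WINDOW_TOKENS[tier]


# A 10,000-token conversation is too long for Free but fits Plus.
for tier in ("free", "plus"):
    print(tier, fits_in_context(tier, 10_000))
```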

ChatGPT Free users should also know that they can use GPT-4o mini as much as they want, which is not the case for GPT-4o. ChatGPT Free might not be as fast as the paid plans, though. OpenAI’s blog post includes benchmark scores showing that GPT-4o mini outperforms its direct competitors, Gemini Flash and Claude Haiku. The company says that GPT-4o mini did better than those models on reasoning tasks, math and coding skills, and multimodal reasoning.

How to use GPT-4o mini to build AI applications

OpenAI has released GPT-4o mini, its “most affordable and intelligent small model” to date. This is a big step toward making AI easier for more people to use, and it should allow more developers and apps to adopt AI technology.

OpenAI says that GPT-4o mini will “extend the range of applications built with AI by making intelligence much more affordable.” With an 82% score on the Massive Multitask Language Understanding (MMLU) benchmark, GPT-4o mini is already impressing developers. OpenAI says it “currently outperforms GPT-4 on chat preferences in LMSYS leaderboard.” Read on to find out more about what GPT-4o mini can do and how you can use it to build AI apps.

Features and capabilities

Chain or parallelize multiple model calls, such as calling multiple APIs. Because GPT-4o mini is cheap and fast, an app can afford to call it several times for one task, either one step after another or at the same time.

Pass a lot of context to the model, like a full code base or conversation history. GPT-4o mini can handle these large inputs, which makes richer AI interactions possible.

Talk to customers through real-time text responses, for example in customer service chatbots. Low latency makes GPT-4o mini a good fit for chatbots or any other app that needs to send and receive text quickly.
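The difference between chaining and parallelizing model calls can be sketched in a few lines of Python. This is an illustration only: `call_model` is a hypothetical stand-in that simulates a request with a short delay, and in a real app it would be replaced by an actual API call.

```python
import asyncio


async def call_model(prompt: str) -> str:
    """Hypothetical stand-in for one GPT-4o mini request."""
    await asyncio.sleep(0.01)  # simulates network latency
    return f"response to: {prompt}"


async def run_parallel(prompts: list[str]) -> list[str]:
    # gather() runs the calls concurrently and keeps results in input order.
    return await asyncio.gather(*(call_model(p) for p in prompts))


async def run_chain(text: str) -> str:
    # Chained calls: the output of one step is the input of the next.
    summary = await call_model(f"Summarize: {text}")
    return await call_model(f"Translate to French: {summary}")


print(asyncio.run(run_parallel(["ticket 1", "ticket 2", "ticket 3"])))
print(asyncio.run(run_chain("a long support conversation")))
```

Parallel calls suit independent tasks such as classifying many support tickets, while a chain suits multi-step work where each step depends on the previous result.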

Making prototypes and designs

GPT-4o mini can also help you speed up the process of designing a product. For instance, it can help you come up with new ideas, write documents, and even draft user guides. It can also help keep wording and structure consistent, even in big projects with lots of documentation.

Analysis of user feedback

It’s great to get feedback from users, but it can be very hard to sort through all the comments. You can save time and stress by using GPT-4o mini to look through a lot of feedback and find trends and ways to make things better. Even raw feedback can yield useful information that helps you make decisions to improve your product and the experience of the people who use it.

Automated documentation

Writing detailed technical documents and manuals for new chip designs? GPT-4o mini can crank them out for you, saving you tons of time. Plus, it’s multilingual, so you can create docs in multiple languages with ease.

Error detection and troubleshooting

There’s nothing worse than being sure that there is a mistake in the code but not being able to find it. The good news is that you can give GPT-4o mini context about your coding style and projects, and then use its strong performance in coding and reasoning tasks to help engineers find and fix design errors.

Developing intelligence that is cost-effective

OpenAI wants to make intelligence available to as many people as possible. We are introducing GPT-4o mini today, which is our most cost-efficient small model. We believe that GPT-4o mini will greatly increase the number of applications that can be built with AI by making intelligence much more affordable. GPT-4o mini scores 82% on MMLU and currently outperforms GPT-4 on chat preferences on the LMSYS leaderboard.

It costs 15 cents per million input tokens and 60 cents per million output tokens, which is a lot less than previous frontier models and more than 60% less than GPT-3.5 Turbo.
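As a worked example of this pricing, the sketch below estimates the cost of a single request from its token counts. The function name `request_cost_usd` and the sample token counts are illustrative assumptions; only the two per-million prices come from the article.

```python
# Prices quoted in the article, in US dollars per one million tokens.
INPUT_USD_PER_MILLION = 0.15
OUTPUT_USD_PER_MILLION = 0.60


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its token counts."""
    total = (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    )
    return total / 1_000_000


# Example: a 10,000-token prompt that produces a 1,000-token answer.
cost = request_cost_usd(10_000, 1_000)
print(f"${cost:.4f}")  # prints $0.0021
```

At these rates, even a prompt that carries a long conversation history costs a fraction of a cent.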

Due to its low cost and latency, GPT-4o mini can do a lot of different tasks. For example, it can be used in apps that call multiple APIs or models in a chain, pass a lot of information to the models (like the full code base or conversation history), or talk to customers through fast, real-time text responses (like customer service chatbots).

The GPT-4o mini API supports text and vision right now. In the future, support for text, image, video, and audio inputs and outputs will be added. The model has a knowledge cutoff of October 2023, a context window of 128K tokens, and supports up to 16K output tokens per request. Thanks to the improved tokenizer it shares with GPT-4o, handling non-English text is now even more cost-effective.
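A text-plus-image request can be sketched as a plain Python dictionary in the shape used by the Chat Completions API. Treat this as an illustration, not a definitive client: the helper name `build_vision_request`, the example URL, and the default `max_tokens` value are assumptions, and the payload is only built and printed here, not sent.

```python
import json


def build_vision_request(question: str, image_url: str,
                         max_output_tokens: int = 300) -> dict:
    """Build a Chat Completions style payload with text and an image."""
    return {
        "model": "gpt-4o-mini",
        "max_tokens": max_output_tokens,  # assumed cap on the reply length
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Hypothetical image URL, used only for illustration.
payload = build_vision_request(
    "What is shown in this image?", "https://example.com/chart.png"
)
print(json.dumps(payload, indent=2))
```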

GPT-4o mini does better than GPT-3.5 Turbo and other small models on academic tests for both textual intelligence and multimodal reasoning. It also works with the same languages as GPT-4o. Additionally, it has better long-context performance than GPT-3.5 Turbo and strong performance in function calling, which lets developers make apps that get data from or interact with external systems.
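Function calling is easier to picture with a small example. The sketch below describes one function to the model in the tool format used by the Chat Completions API and then runs the function when the model asks for it. The `get_order_status` function, its hard-coded data, and the helper names are hypothetical; no request is actually sent.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical app function that the model may ask to call."""
    return {"order_id": order_id, "status": "shipped"}


# Tool description sent with the request so the model knows what it can call.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]


def build_request(user_message: str) -> dict:
    """Build a request payload that offers the tool to the model."""
    return {
        "model": "gpt-4o-mini",
        "messages": [{"role": "user", "content": user_message}],
        "tools": TOOLS,
    }


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the requested function; arguments arrive as a JSON string."""
    registry = {"get_order_status": get_order_status}
    result = registry[name](**json.loads(arguments_json))
    return json.dumps(result)


# Simulates the model asking for get_order_status with order_id "A123".
print(dispatch_tool_call("get_order_status", '{"order_id": "A123"}'))
```

The app, not the model, executes the function; the JSON result is then passed back to the model so it can phrase the final answer.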

Reasoning tasks: The GPT-4o mini does better than other small models on tasks that require both text and vision reasoning. It scored 82.0% on MMLU, a test of textual intelligence and reasoning, while Gemini Flash scored 77.9% and Claude Haiku scored 73.8%.

Math and coding skills: The GPT-4o mini does better than other small models on the market at mathematical reasoning and coding tasks. GPT-4o mini got an 87.0% on the MGSM test of math reasoning, while Gemini Flash got a 75.5% and Claude Haiku got a 71.7%. HumanEval, a test of coding skills, gave GPT-4o mini an 87.2% score, while Gemini Flash got a 71.5% score and Claude Haiku got a 75.9% score.

Multimodal reasoning: GPT-4o mini also does well on MMMU, a test of multimodal reasoning. It scored 59.4%, compared to 56.1% for Gemini Flash and 50.2% for Claude Haiku.

Safety features built in

Safety is built into our models from the start, and it’s improved at every stage of the process. We filter out things like hate speech, adult content, sites that mostly collect personal information, and spam during pre-training so that our models don’t learn from or show them.

After training, we use methods like reinforcement learning from human feedback (RLHF) to make sure the model’s behavior is in line with our policies. This makes the models’ responses more accurate and reliable. The safety features built into GPT-4o mini are the same as those in GPT-4o. We carefully checked them using both automated and human evaluations, as required by our Preparedness Framework and our voluntary commitments.

Over 70 outside experts in social psychology, misinformation, and other areas tested GPT-4o to find any possible risks. We’ve taken care of these risks and will share more information about them in the soon-to-be-released GPT-4o system card and Preparedness scorecard. Both GPT-4o and GPT-4o mini are safer now thanks to what these expert reviews have shown. Using what they had learned, our teams also worked to make GPT-4o mini safer by using new methods that were based on our research.

GPT-4o mini in the API is the first model to use our instruction hierarchy method. This makes the model more resistant to jailbreaks, prompt injections, and system prompt extractions. As a result, the model’s responses are more reliable, and it is safer to use in large-scale applications. As we find new risks, we’ll keep an eye on how GPT-4o mini is being used and make it safer as needed.

Availability and pricing

GPT-4o mini is now available as a text and vision model in the Assistants API, the Chat Completions API, and the Batch API. Developers are charged 15 cents per 1 million input tokens and 60 cents per 1 million output tokens. One million tokens is roughly the equivalent of 2,500 pages in a standard book.
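For the Batch API, requests are submitted as a JSONL file with one request per line. The following is a minimal sketch of how such lines could be built, assuming the commonly documented fields `custom_id`, `method`, `url`, and `body`; the helper name `build_batch_lines` and the sample prompts are illustrative, and uploading the file is left out.

```python
import json


def build_batch_lines(prompts: list[str]) -> list[str]:
    """Return one JSON line per prompt for a batch input file."""
    lines = []
    for i, prompt in enumerate(prompts, start=1):
        request = {
            "custom_id": f"request-{i}",  # lets you match results to inputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": "gpt-4o-mini",
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return lines


# Each printed line is one self-contained request.
for line in build_batch_lines(["Summarize review 1", "Summarize review 2"]):
    print(line)
```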

Fine-tuning for GPT-4o mini will roll out over the next few days. As of today, Free, Plus, and Team users of ChatGPT can access GPT-4o mini instead of GPT-3.5. Beginning next week, Enterprise users will also have access. This is part of our mission to make AI benefits available to everyone.

What Will Happen Next?

In the past few years, we’ve seen amazing progress in AI intelligence along with big price drops. For instance, the cost per token of GPT-4o mini is 99% lower than that of text-davinci-003, a less capable model that came out in 2022. We’re committed to keeping going in this direction of lowering costs and making models more useful.

It’s our dream that one day, models will be built right into all apps and websites. Thanks to GPT-4o mini, developers can now make and use powerful AI apps more quickly and less expensively. AI is getting easier to use, more reliable, and more integrated into our everyday digital lives. We’re excited to keep leading the way.

Conclusion

GPT-4o mini is a powerful tool that is also very affordable, making it useful for a wide range of tasks. Its features, like fast processing, a large context window, and multimodal support, make it a strong contender in the AI field. GPT-4o mini supports text and vision at launch, and OpenAI plans to add audio and video later. This shows how flexible and future-proof it is.

FAQs

1. What are the core GPT-4o mini features?

GPT-4o mini offers low-latency processing, a large context window, and multimodal capabilities that let it handle both text and vision inputs.

2. How does GPT-4o mini’s cost compare to other models?

GPT-4o mini costs 15 cents per million input tokens and 60 cents per million output tokens, which is more than 60% less than GPT-3.5 Turbo. That makes advanced AI accessible to a wider range of users and applications.

3. What future features are planned for GPT-4o mini?

OpenAI plans to expand GPT-4o mini to support audio and video in addition to text and images, further broadening its potential applications.

4. What is the context window size of GPT-4o mini?

GPT-4o mini has a context window of 128K tokens and supports up to 16K output tokens per request. In ChatGPT, the available context window depends on the plan.

5. Can GPT-4o mini handle multiple modalities?

Yes. GPT-4o mini can process both text and visual data, which expands the range of applications it can be used for.