Introduction

Artificial intelligence (AI) is the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term is frequently applied to efforts to develop systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, and learn from past experience. Since the development of the digital computer in the 1940s, it has been demonstrated that computers can be programmed to carry out very complex tasks, such as discovering proofs for mathematical theorems or playing chess, with great proficiency.

Still, despite continuing advances in computer processing speed and memory capacity, no program yet matches full human flexibility over wider domains or in tasks requiring much everyday knowledge. On the other hand, some programs have attained the performance levels of human experts and professionals in certain specific tasks, so artificial intelligence in this limited sense is found in applications as diverse as medical diagnosis, computer search engines, voice or handwriting recognition, and chatbots.

What Is Artificial Intelligence (AI)?

Artificial intelligence (AI) is the term used to describe software-coded heuristics that simulate human intellect. These days, this code may be found everywhere from consumer apps to embedded firmware to cloud-based enterprise applications.

AI entered the mainstream in 2022 through the widespread use of applications built on the Generative Pre-trained Transformer (GPT). The most popular of these is OpenAI’s ChatGPT. The widespread fascination with ChatGPT has made it synonymous with AI in the minds of most consumers. However, it represents only a small portion of the ways AI technology is used today.

The ideal characteristic of artificial intelligence is its ability to reason and take actions that have the best chance of achieving a specific goal. Machine learning (ML), a subset of artificial intelligence, is the concept that computer programs can automatically learn from and adapt to new data without human assistance. Deep learning techniques enable this automatic learning by ingesting huge amounts of unstructured data such as text, images, and video.

How does AI work?

As awareness of AI has increased, businesses have been scrambling to promote how their products and services use it. Often, what they refer to as AI is just one component of the technology, such as machine learning. AI requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No single programming language is synonymous with AI, but Python, R, Java, C++, and Julia all have features that are popular with AI developers.

In general, AI systems work by ingesting large amounts of training data, analyzing the data for correlations and patterns, and using these patterns to make predictions about future states. In this way, a chatbot that is fed examples of text can learn to produce lifelike exchanges with people, and an image recognition tool can learn to identify and describe objects in images by reviewing millions of examples. Generative AI techniques can create realistic text, images, music, and other media.
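
The learn-then-predict loop described above can be illustrated with a deliberately tiny example. The sketch below assumes the simplest possible pattern, a straight-line trend. It fits that trend to a handful of made-up data points by ordinary least squares and then uses it to forecast the next value. The function names `fit_linear_trend` and `forecast` and the data are hypothetical. Real AI systems learn far richer patterns from far more data.

```python
# Minimal sketch: learn a straight-line trend from toy training data using
# ordinary least squares, then use the learned pattern to forecast.
# The data below are made up for illustration.

def fit_linear_trend(xs, ys):
    """Return (slope, intercept) of the line that minimizes squared error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def forecast(model, x):
    """Apply the learned pattern to a new input."""
    slope, intercept = model
    return slope * x + intercept

# Hypothetical training data: time step -> observed value.
train_x = [1, 2, 3, 4, 5]
train_y = [2.1, 3.9, 6.2, 7.8, 10.1]

model = fit_linear_trend(train_x, train_y)
print(f"forecast for step 6: {forecast(model, 6):.2f}")  # prints 11.99
```

The "training" step here is a single closed-form calculation. Larger systems typically replace it with iterative optimization over millions of parameters, but the pattern of learning from examples and then predicting is the same.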

In AI programming, the following cognitive qualities are prioritized:

- Creativity: This aspect of AI uses neural networks, rules-based systems, statistical methods, and other AI techniques to generate new images, text, music, and ideas.

- Self-correction: This aspect of AI programming continually fine-tunes algorithms to ensure they deliver the most accurate results possible.

- Reasoning: This area of AI programming is concerned with selecting the best algorithm to achieve a particular result.

- Learning: This aspect of AI programming focuses on acquiring data and creating the rules needed to turn it into useful information. These rules, called algorithms, give computing devices step-by-step instructions for completing a specific task.
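
Self-correction and learning can be illustrated together with gradient descent, one common technique. The article does not name a method, so this choice is an assumption. In this minimal sketch, a one-parameter model repeatedly measures its error on example data and adjusts its parameter to shrink that error. The data, learning rate, and step count are illustrative choices.

```python
# Minimal sketch of error-driven "self-correction" using gradient descent
# (a technique assumed for illustration; the article does not name one).
# A one-parameter model y = w * x repeatedly measures its own error on
# example data and nudges w in the direction that reduces that error.

def mean_squared_error(w, xs, ys):
    """Average squared gap between predictions w * x and targets y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(w, xs, ys):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, learning_rate=0.05, steps=200):
    w = 0.0  # start from an uninformed guess
    history = []  # error recorded before each correction step
    for _ in range(steps):
        history.append(mean_squared_error(w, xs, ys))
        w -= learning_rate * gradient(w, xs, ys)
    return w, history

# Hypothetical examples generated by the rule y = 3x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

w, history = train(xs, ys)
print(f"learned w = {w:.4f}, error went from {history[0]} to {history[-1]:.2e}")
```

Each pass shrinks the remaining error. With these particular settings, the learned weight settles at the value 3 that generated the data.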

Types of Artificial Intelligence

Artificial intelligence can be divided into two categories: weak and strong. Weak artificial intelligence describes a system designed to carry out one particular job. Examples of weak AI include video games, such as the chess example mentioned earlier, and personal assistants, such as Apple’s Siri and Amazon’s Alexa. You ask the assistant a question, and it answers it for you.

Strong artificial intelligence systems carry out tasks that are considered human-like. These systems tend to be more complex and complicated. They are programmed to handle situations in which they may need to solve problems without human intervention. Applications of this kind of technology include self-driving cars and systems used in hospital operating rooms.

Deep learning vs. machine learning

Because the terms deep learning and machine learning are sometimes used interchangeably, it is worth understanding the distinction between them. Deep learning is a subfield of machine learning, which is itself a subfield of artificial intelligence.

Neural networks form the backbone of deep learning algorithms. The “deep” in deep learning refers to the number of layers. A neural network with more than three layers, counting the input and output layers, can be considered a deep learning algorithm. This is generally represented in the illustration that follows.

Deep learning and machine learning differ in how each algorithm learns. Deep learning automates much of the feature extraction stage of the process, eliminating some of the manual human intervention required and enabling the use of larger data sets. As Lex Fridman noted in an MIT lecture, deep learning can be thought of as “scalable machine learning.” Classical, or “non-deep,” machine learning depends more on human intervention. Human experts determine the hierarchy of features used to distinguish between data inputs, which usually requires more structured data to learn from.

“Deep” machine learning can use labeled datasets, an approach known as supervised learning, to guide its algorithm, but it does not require them. It can ingest unstructured data in its raw form, such as text and images, and automatically determine the hierarchy of features that distinguish different categories of data from one another. Because it does not rely on human intervention to process data the way traditional methods do, it allows machine learning to scale in more interesting ways.
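
The layered structure described in this section can be sketched as a forward pass through a small network with four layers: input, two hidden layers, and output. That meets the "more than three layers" description. This minimal sketch assumes fully connected layers with ReLU and sigmoid activations. The weights are arbitrary placeholders rather than learned values, so the code shows only how data flows through the layers, not how training or automatic feature extraction works.

```python
import math

# Minimal sketch of a forward pass through a small "deep" network:
# input (3 values) -> hidden layer (4) -> hidden layer (3) -> output (1).
# That is four layers counting input and output, i.e. more than three.
# All weights and biases are arbitrary illustrative values, not trained ones.

def dense(inputs, weights, biases):
    """Fully connected layer: each output = dot(weight_row, inputs) + bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))

LAYERS = [
    # input -> hidden 1: 4 rows of 3 weights, 4 biases
    ([[0.2, -0.5, 0.1], [0.4, 0.3, -0.2], [-0.3, 0.8, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.05]),
    # hidden 1 -> hidden 2: 3 rows of 4 weights, 3 biases
    ([[0.5, -0.2, 0.3, 0.1], [-0.4, 0.6, 0.2, -0.1], [0.3, 0.3, -0.5, 0.2]],
     [0.0, 0.0, 0.1]),
    # hidden 2 -> output: 1 row of 3 weights, 1 bias
    ([[0.7, -0.6, 0.4]], [0.0]),
]

def forward(x):
    activations = x
    for i, (weights, biases) in enumerate(LAYERS):
        z = dense(activations, weights, biases)
        # ReLU on hidden layers; sigmoid squashes the final output into (0, 1)
        activations = relu(z) if i < len(LAYERS) - 1 else [sigmoid(z[0])]
    return activations

print(forward([1.0, 0.5, -1.0]))
```

In a real deep learning system, the weights would be learned from data rather than written by hand. That is where the reduced need for manual feature engineering comes from.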

Strong AI vs. weak AI

AI can be categorized as strong or weak.

- Strong artificial intelligence, also known as artificial general intelligence (AGI), describes programming that can replicate the cognitive abilities of the human brain. When presented with an unfamiliar task, a strong AI system can use fuzzy logic to apply knowledge from one domain to another and find a solution autonomously. In theory, a strong AI program should be able to pass both the Turing test and the Chinese Room argument test.

- Weak artificial intelligence, also known as narrow AI, is designed and trained to complete a specific task. Industrial robots and virtual personal assistants, such as Apple’s Siri, use weak AI.

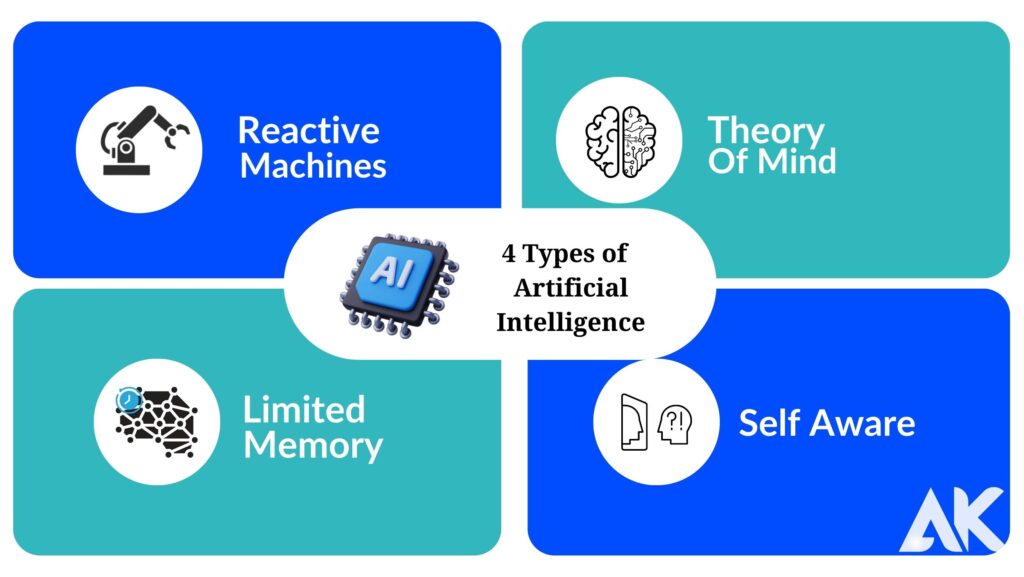

What are the 4 types of artificial intelligence?

According to Arend Hintze, an assistant professor of integrative biology, computer science, and engineering at Michigan State University, there are four categories of artificial intelligence. They start with the task-specific intelligent systems in wide use today and progress to sentient systems, which remain speculative. The categories are as follows.

- Reactive machines: These AI systems have no memory and are task specific. An example is Deep Blue, the IBM chess program that beat Garry Kasparov in the 1990s. Deep Blue can identify the pieces on a chessboard and make predictions, but because it has no memory, it cannot use past experiences to inform future ones.

- Limited memory: These AI systems have memory, so they can use past experiences to inform present decisions. Some of the decision-making functions in self-driving cars are designed this way.

- Theory of mind: Theory of mind is a psychology term. When applied to AI, it means the system would have the social intelligence to understand emotions. This type of AI will be able to infer human intentions and predict behavior, a capability AI systems need in order to become integral members of human teams.

- Self-awareness: In this category, AI systems have a sense of self, which gives them consciousness. Machines with self-awareness understand their own current state.

There is currently no such AI.
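
The practical difference between the first two categories, reactive machines and limited-memory systems, can be sketched with a toy driving decision. The scenario, distances, and thresholds below are hypothetical and chosen only for illustration. They do not describe how any real self-driving system works. The reactive policy looks only at the current reading. The limited-memory policy keeps a short window of past readings and uses the trend to inform its choice.

```python
from collections import deque

# Hypothetical scenario: a car reads the distance (in meters) to the vehicle
# ahead and chooses to "brake" or "cruise". Thresholds are illustrative.

def reactive_policy(distance_m):
    """Reactive machine: decides from the current observation only."""
    return "brake" if distance_m < 10 else "cruise"

class LimitedMemoryPolicy:
    """Limited memory: keeps a short window of recent observations and
    uses the trend (is the gap closing quickly?) to inform the decision."""

    def __init__(self, window=3):
        self.recent = deque(maxlen=window)  # oldest readings drop off automatically

    def act(self, distance_m):
        self.recent.append(distance_m)
        window_full = len(self.recent) == self.recent.maxlen
        closing_fast = window_full and (self.recent[0] - self.recent[-1]) > 5
        return "brake" if distance_m < 10 or closing_fast else "cruise"

readings = [30, 25, 19, 14]
agent = LimitedMemoryPolicy()
for d in readings:
    print(d, reactive_policy(d), agent.act(d))
```

With these readings, the reactive policy keeps cruising because no single distance is below 10 m. The limited-memory policy starts braking once its stored history shows the gap shrinking quickly.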

How Is AI Used in Healthcare?

AI is used in healthcare settings to assist in diagnostics. AI is very good at identifying small anomalies in scans, and it can help triangulate a more accurate diagnosis from a patient’s symptoms and vital signs. AI is also used to classify patients, maintain and track medical records, and process insurance claims. Anticipated future innovations include AI-assisted robotic surgery, virtual nurses or doctors, and other technological advances.

What are the applications of AI?

Artificial intelligence has been adopted across a wide range of markets. Here are 11 examples.

AI in healthcare: The biggest bets are on improving patient outcomes and reducing costs. Companies are applying machine learning to make better and faster diagnoses than humans. Some of these systems understand natural language and can respond to questions asked of them. Such a system mines patient data and other available data sources to form a hypothesis, which it then presents with a confidence scoring schema. Other AI applications include online chatbots and virtual health assistants that help patients and healthcare customers with administrative tasks such as scheduling appointments, understanding billing, and finding medical information. A variety of AI technologies are also being used to predict, fight, and understand pandemics such as COVID-19.

AI in business: Machine learning algorithms are being integrated into analytics and customer relationship management (CRM) platforms to uncover information on how to better serve customers. Chatbots have been incorporated into websites to provide immediate assistance to users. The rapid advancement of generative AI technologies such as ChatGPT is expected to have far-reaching consequences, including eliminating jobs, revolutionizing product design, and disrupting business models.

AI in the classroom: AI can automate grading, giving educators more time for other tasks. It can assess students and adapt to their needs, helping them work at their own pace. AI tutors can provide additional support to students, ensuring they stay on track. The technology could also change where and how students learn, perhaps even replacing some teachers. As demonstrated by ChatGPT, Bard, and other large language models, generative AI can help educators create lesson plans and other teaching materials and engage students in new ways. The advent of these tools also forces educators to rethink student assignments, exams, and plagiarism policies.

AI in finance: AI in personal finance applications, such as Intuit Mint or TurboTax, is disrupting financial institutions. Applications like these collect personal data and provide financial advice. Other programs, such as IBM Watson, have been applied to the process of buying a home. Today, artificial intelligence software performs much of the trading on Wall Street.

AI in law: The discovery process, in which documents are sifted through during a legal case, is often overwhelming for humans. Using AI to help automate the legal industry’s labor-intensive processes is saving time and improving client service. Law firms use machine learning to describe data and predict outcomes, computer vision to classify and extract information from documents, and natural language processing (NLP) to interpret requests for information.

AI in media and entertainment: The entertainment industry uses AI for targeted advertising, content recommendations, distribution, fraud detection, script creation, and movie production. Automated journalism helps newsrooms streamline media workflows, reducing time, costs, and complexity. Newsrooms use AI to automate routine tasks, such as data entry and proofreading, and to research topics and assist with headlines.

AI in IT and software development: New generative AI tools can produce application code from natural language prompts, but these tools are still in their early days and are unlikely to replace software engineers soon. AI is also being used to automate many IT processes, including data entry, fraud detection, customer service, predictive maintenance, and security.

AI in security: AI and machine learning are at the top of the buzzword list that security vendors use to market their products, so buyers should approach with caution. Still, AI techniques are being successfully applied to multiple aspects of cybersecurity, including anomaly detection, solving the false-positive problem, and conducting behavioral threat analytics. Organizations use machine learning in security information and event management (SIEM) software and related areas to detect anomalies and identify suspicious activities that could indicate threats. By analyzing data and using logic to identify similarities to known malicious code, AI can alert to new and emerging attacks much sooner than human employees and previous technology iterations.
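
Anomaly detection of the kind mentioned above can be sketched in its simplest statistical form. The idea is to learn a baseline of normal behavior and flag values that stray too far from it. This sketch uses a z-score test on hypothetical hourly failed-login counts. The data and the threshold of three standard deviations are assumptions. Production SIEM tools use considerably more sophisticated models.

```python
import statistics

# Minimal sketch of statistical anomaly detection: learn what "normal"
# looks like from a baseline, then flag new observations that deviate
# by more than a chosen number of standard deviations (a z-score test).
# The data and the threshold of 3 are illustrative assumptions.

def fit_baseline(values):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return statistics.mean(values), statistics.stdev(values)

def is_anomalous(value, baseline, threshold=3.0):
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # any deviation from a constant baseline is unusual
    return abs(value - mean) / stdev > threshold

# Hypothetical failed-login counts per hour observed during normal operation.
normal_hours = [4, 6, 5, 3, 7, 5, 4, 6, 5, 5]
baseline = fit_baseline(normal_hours)

for count in [6, 8, 48]:
    print(count, is_anomalous(count, baseline))
```

Only the burst of 48 failed logins is flagged. The threshold trades missed attacks against false positives, which is the false-positive problem the paragraph above refers to.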

AI in manufacturing: Manufacturing has been at the forefront of incorporating robots into the workflow. Industrial robots that were once programmed to perform single tasks and kept separate from human workers increasingly function as cobots: smaller, multitasking robots that collaborate with humans and take on responsibility for more parts of the job in warehouses, on factory floors, and in other workspaces.

AI in banking: Banks are successfully using chatbots to make their customers aware of services and offerings and to handle transactions that don’t require human intervention. AI virtual assistants are used to improve compliance with banking regulations and reduce its cost. Banking organizations use AI to improve their decision-making for loans, set credit limits, and identify investment opportunities.

AI in transportation: In addition to playing a fundamental role in operating autonomous vehicles, AI technologies are used in transportation to manage traffic, predict flight delays, and make ocean shipping safer and more efficient. In supply chains, AI is replacing traditional methods of forecasting demand and detecting disruptions. COVID-19 accelerated this trend, catching many companies off guard with its effects on the global supply and demand of goods.

AI methods and goals

Symbolic methods versus connectionist methods

Two distinct, and to some extent competing, approaches exist in AI research: the symbolic (or “top-down”) approach and the connectionist (or “bottom-up”) approach. The top-down approach seeks to replicate intelligence by analyzing cognition in terms of the processing of symbols (hence the symbolic label), independent of the biological structure of the brain. The bottom-up approach, on the other hand, involves creating artificial neural networks that imitate the brain’s structure (hence the connectionist label).

To illustrate the difference between these approaches, consider the task of building a system, equipped with an optical scanner, that recognizes the letters of the alphabet. A bottom-up approach typically involves training an artificial neural network by presenting letters to it one by one, gradually improving performance by “tuning” the network. (Tuning adjusts the responsiveness of different neural pathways to different stimuli.) A top-down approach, by contrast, typically involves writing a computer program that compares each letter with geometric descriptions. Simply put, neural activities are the basis of the bottom-up approach, while symbolic descriptions are the basis of the top-down approach.
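
The letter-recognition example can be made concrete with a toy version. The sketch below assumes just two letters, "T" and "L", drawn on 3x3 pixel grids. The top-down classifier checks each grid against hand-written geometric descriptions. The bottom-up classifier is a single perceptron, used here as a minimal stand-in for an artificial neural network, whose connection weights are tuned as labeled examples are presented one at a time. The grids, rules, and training settings are illustrative assumptions.

```python
# Toy contrast between the two approaches, using 3x3 pixel grids
# (1 = ink, 0 = blank) for two letters. Grids and rules are illustrative.

T = [1, 1, 1,
     0, 1, 0,
     0, 1, 0]
L = [1, 0, 0,
     1, 0, 0,
     1, 1, 1]

# Top-down (symbolic): compare the input against geometric descriptions.
def symbolic_classify(grid):
    rows = [grid[0:3], grid[3:6], grid[6:9]]
    top_bar = rows[0] == [1, 1, 1]
    centre_stem = all(r[1] == 1 for r in rows)
    left_stem = all(r[0] == 1 for r in rows)
    bottom_bar = rows[2] == [1, 1, 1]
    if top_bar and centre_stem:
        return "T"
    if left_stem and bottom_bar:
        return "L"
    return "unknown"

# Bottom-up (connectionist): a perceptron whose connection weights are
# "tuned" each time a labeled example is presented.
def train_perceptron(examples, epochs=10, lr=1.0):
    weights = [0.0] * 9
    bias = 0.0
    for _ in range(epochs):
        for grid, label in examples:  # label: 1 for "T", 0 for "L"
            activation = sum(w * x for w, x in zip(weights, grid)) + bias
            predicted = 1 if activation > 0 else 0
            error = label - predicted
            # Strengthen or weaken each active connection according to the error.
            weights = [w + lr * error * x for w, x in zip(weights, grid)]
            bias += lr * error
    return weights, bias

def perceptron_classify(perceptron, grid):
    weights, bias = perceptron
    activation = sum(w * x for w, x in zip(weights, grid)) + bias
    return "T" if activation > 0 else "L"

perceptron = train_perceptron([(T, 1), (L, 0)])
for name, grid in [("T", T), ("L", L)]:
    print(name, symbolic_classify(grid), perceptron_classify(perceptron, grid))
```

Both classifiers get these two clean letters right. The difference lies in where the knowledge comes from. In the symbolic version, a programmer writes the geometric rules. In the connectionist version, the weights emerge from the examples.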

In The Fundamentals of Learning (1932), Edward Thorndike, a psychologist at Columbia University in New York City, first suggested that human learning consists of some as-yet-unknown property of connections between neurons in the brain. In The Organization of Behavior (1949), Donald Hebb, a psychologist at McGill University in Montreal, Canada, suggested that learning specifically involves strengthening certain patterns of neural activity by increasing the probability (weight) of induced neuron firing between the associated connections. The notion of weighted connections is discussed further in the Connectionism section.
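
Hebb's idea is often formalized in textbooks as a simple update rule. When an input neuron and an output neuron fire together, the weight of the connection between them grows in proportion to both activities: Δw = η · x · y. That common formalization is used below as an assumption. It is not a formula quoted from Hebb's book. The learning rate and the activity record are made up for illustration.

```python
# Minimal sketch of a Hebbian-style update (a common textbook formalization,
# assumed here): the connection weight grows by learning_rate * x * y, so it
# strengthens only when presynaptic input x and postsynaptic output y are
# active together.

def hebbian_update(weight, x, y, learning_rate=0.1):
    return weight + learning_rate * x * y

# Hypothetical activity record: (presynaptic firing, postsynaptic firing).
activity = [(1, 1), (1, 1), (0, 1), (1, 0), (1, 1)]

w = 0.0
for x, y in activity:
    w = hebbian_update(w, x, y)

print(w)  # three co-active pairs, so w ends near 0.3
```

The pure rule above only ever increases weights. Practical models usually add decay or normalization so that weights cannot grow without bound.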

Allen Newell, a researcher at the RAND Corporation in Santa Monica, California, and Herbert Simon, a psychologist and computer scientist at Carnegie Mellon University in Pittsburgh, Pennsylvania, summed up the top-down approach in 1957 in what they called the physical symbol system hypothesis. This hypothesis holds that processing structures of symbols is, in principle, sufficient to produce artificial intelligence in a digital computer and, moreover, that human intelligence is the result of the same kind of symbolic manipulation.

Bottom-up AI was neglected during the 1970s, and it was not until the 1980s that this approach again became prominent. Today both approaches are followed, and both are acknowledged as facing difficulties. Symbolic techniques often break down when applied to the real world, while bottom-up researchers have been unable to replicate the nervous systems of even the simplest living things. Caenorhabditis elegans, a much-studied worm, has approximately 300 neurons whose pattern of interconnections is completely known. Yet connectionist models have failed to mimic even this worm. Evidently, the neurons of connectionist theory are gross oversimplifications of the real thing.

What is the history of AI?

The concept of inanimate objects endowed with intelligence has been around since ancient times. In Greek myths, the god Hephaestus was depicted as forging robot-like servants out of gold. Engineers in ancient Egypt built statues of gods that priests could animate. Thinkers from Aristotle to the 13th-century Spanish cleric Ramon Lull, and later René Descartes and Thomas Bayes, described human thought processes as symbols, laying the foundation for AI concepts such as general knowledge representation.

Foundational work in the 19th and early 20th centuries gave rise to the modern computer. In 1836, Cambridge University mathematician Charles Babbage and Augusta Ada King, Countess of Lovelace, created the first design for a programmable machine.

The 1940s. Princeton mathematician John von Neumann conceived the architecture for the stored-program computer, in which both the program and the data it processes are kept in the computer’s memory. In addition, Warren McCulloch and Walter Pitts laid the foundation for neural networks.

The 1950s. With the advent of modern computers, scientists could test their ideas about machine intelligence. British mathematician and World War II codebreaker Alan Turing devised one method for determining whether a computer has intelligence. The Turing test measured a computer’s ability to fool an interrogator into believing that its answers to questions had been given by a human being.

1956. The modern field of artificial intelligence is widely considered to have begun this year, at a summer conference at Dartmouth College. The conference, which was funded by the Rockefeller Foundation, was attended by 10 luminaries in the field, including Marvin Minsky, Oliver Selfridge, and John McCarthy, who is credited with coining the term artificial intelligence. Also in attendance were computer scientist Allen Newell and Herbert A. Simon, an economist, political scientist, and cognitive psychologist. The two presented their Logic Theorist, a computer program capable of proving certain mathematical theorems that is often referred to as the first AI program.

The 1950s and 1960s. In the wake of the Dartmouth College conference, leaders in the fledgling field of AI predicted that a machine intelligence equivalent to the human brain was around the corner, attracting major government and industry support. Indeed, more than 20 years of well-funded basic research generated significant advances in AI. For example, in the late 1950s Newell and Simon published the General Problem Solver (GPS) algorithm, and McCarthy developed Lisp, a language for AI programming that is still used today. Although the GPS algorithm fell short of solving complex problems, it laid the foundation for more sophisticated cognitive architectures.

The ’70s and ’80s. Artificial general intelligence proved elusive rather than imminent, hampered by the complexity of the problem and by limitations in computer processing and memory. Government and business withdrew their funding for AI research, leading to a fallow period from 1974 to 1980 known as the first “AI winter.” In the 1980s, research on deep learning techniques and industry’s adoption of Edward Feigenbaum’s expert systems sparked a new wave of AI enthusiasm. That wave was followed by another collapse of government funding and industry support. This second AI winter lasted until the mid-1990s.

The 2000s. Further advances in machine learning, deep learning, speech recognition, and computer vision gave rise to products and services that have shaped the way we live today. These include the 2000 launch of the Google search engine and the 2001 launch of the Amazon recommendation engine. Netflix developed its movie recommendation system, Facebook introduced its facial recognition technology, and Microsoft launched its speech recognition system. Google started its self-driving car project, now known as Waymo, and IBM introduced Watson.

Conclusion

Artificial intelligence (AI) is the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term usually refers to efforts to build systems with human-like cognitive abilities such as reasoning, discovering meaning, generalizing, and learning from experience. Since the development of the digital computer in the 1940s, computers have been programmed to perform extremely complex tasks with remarkable proficiency. However, despite continuing advances in processing speed and memory, no program yet matches full human flexibility over wider domains or in tasks requiring much everyday knowledge.

The term “artificial intelligence” (AI) refers to software-coded heuristics that mimic human intelligence. This code can be found everywhere, from consumer apps to embedded firmware to cloud-based enterprise systems. OpenAI’s ChatGPT is the most popular AI application, and the widespread fascination with it has made ChatGPT synonymous with AI for most consumers. However, it represents only a small portion of the ways AI technology is used today.

The ideal quality of artificial intelligence is the ability to reason and take actions that have the best likelihood of reaching a certain objective. Machine learning (ML), a subtype of AI, is the idea that computer programs can automatically learn from and adapt to new data without human assistance. Deep learning algorithms allow for this autonomous learning by ingesting vast quantities of unstructured data, including text, photos, and video.

As interest in AI has grown, businesses have been scrambling to promote how their products and services use it. AI requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No single programming language is synonymous with AI, although Python, R, Java, C++, and Julia are popular with AI engineers. AI systems typically ingest large amounts of training data, analyze it for correlations and patterns, and use these patterns to predict future states. Generative AI techniques can create realistic text, images, music, and other media.

Artificial intelligence can be divided into two categories. Weak AI is represented by systems designed to complete a specific task. Strong AI is represented by systems designed to handle situations in which problems may need to be solved without human assistance. Applications of strong AI include self-driving cars and systems used in hospital operating rooms.

Although the terms deep learning and machine learning are sometimes used interchangeably, the two differ in how their algorithms learn. Deep learning automates much of the feature extraction stage, drastically reducing the manual human intervention required and enabling the use of larger data sets. Traditional, or “non-deep,” machine learning relies more heavily on human input to identify the differences between data inputs. Deep learning can use labeled datasets (supervised learning), but it does not require them. It can take in unstructured data, such as text and images, and automatically identify the hierarchy of features that distinguish different categories of data from one another.

FAQ

Who is the father of AI?

John McCarthy is widely regarded as the father of AI. McCarthy was an American computer scientist who coined the term “artificial intelligence.” He is considered one of the founders of the field, along with Alan Turing, Marvin Minsky, Allen Newell, and Herbert A. Simon.

Who was the first AI CEO?

Mika, a humanoid robot that the company Dictador presents as the world’s first AI CEO, made a notable appearance at the Salz21 “Home of Innovation” conference.

Who is the first AI in the world?

The first working AI programs were written in 1951 for the University of Manchester’s Ferranti Mark 1 computer: a checkers-playing program by Christopher Strachey and a chess-playing program by Dietrich Prinz.

Is Siri artificial intelligence?

Yes. Siri and Alexa are AI-powered applications. They rely on machine learning and natural language processing, two branches of AI, to improve their performance over time. Alexa is Amazon’s voice-activated assistant, which works with the Echo smart speaker to respond to spoken commands.

What are the 6 laws of AI?

Microsoft lists six guiding principles for responsible AI: accountability, inclusiveness, reliability and safety, fairness, transparency, and privacy and security.