OpenAI released GPT-4o mini on July 18, 2024, a significant step forward in cost-efficient artificial intelligence. The model is meant to make advanced AI accessible to more people by making deployment much cheaper while keeping strong performance and flexibility. This blog post covers the most important things about GPT-4o mini API integration: its features, benefits, pricing, and how to use it.

What is GPT-4o mini?

GPT-4o mini is a small AI model from OpenAI, designed to provide strong performance at a much lower cost than previous models. It’s part of OpenAI’s larger plan to make capable AI easier to access and cheaper to use.

For more details, visit OpenAI’s official website.

What’s New in the GPT-4o mini API?

OpenAI describes GPT-4o mini as its most cost-efficient small model to date. It makes advanced AI capabilities easier to access and less expensive than before. Here are the most important new things about GPT-4o mini:

1. Unprecedented Cost Efficiency:

The GPT-4o mini is priced very competitively at 15 cents per million input tokens and 60 cents per million output tokens. This makes it much cheaper than older models and over 60% less expensive than the GPT-3.5 Turbo.

2. Superior Performance Metrics:

- Textual Intelligence: GPT-4o mini scores 82% on the MMLU benchmark, which is higher than earlier small models.

- Reasoning and Coding Skills: It does well on tasks that require mathematical reasoning and coding, scoring 87.0% on the MGSM benchmark for math reasoning and 87.2% on HumanEval for coding performance.

3. Versatility in Task Handling:

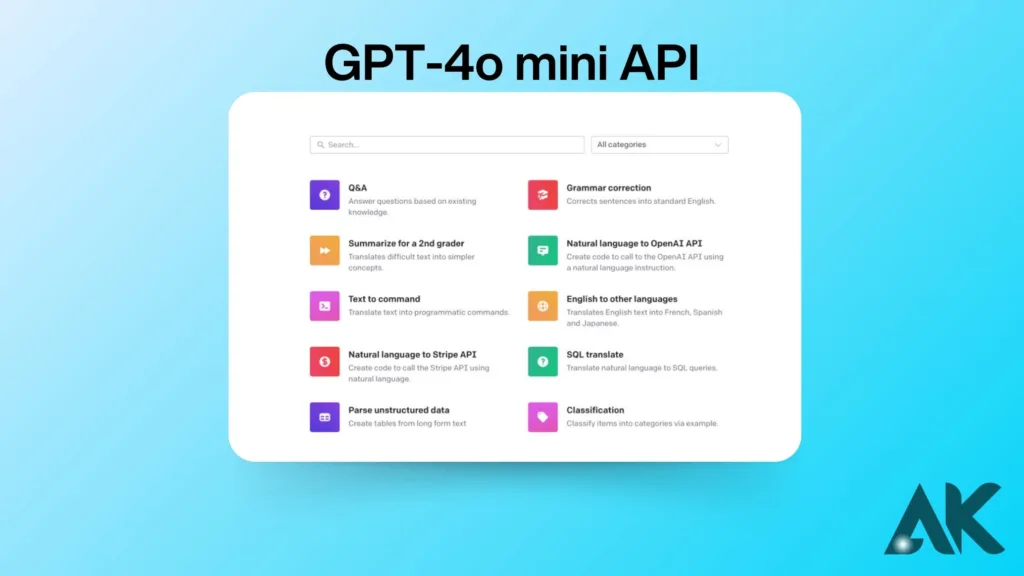

The model can easily handle a wide range of tasks, such as connecting multiple model calls, dealing with large amounts of context, and sending quick text responses in real time for customer interactions.

4. Multimodal Support:

The GPT-4o mini can currently take both text and vision data through its API. In the future, it will be able to take in and send out text, images, videos, and sounds.
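
As a rough illustration of text plus vision input, the sketch below builds a Chat Completions payload that combines a question with an image. The helper name `build_vision_messages` and the example image URL are hypothetical, and the snippet only prints the payload rather than sending it.

```python
import json


def build_vision_messages(question: str, image_url: str) -> list[dict]:
    """Return a Chat Completions `messages` list mixing text and an image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


# Hypothetical image URL, used only for illustration.
messages = build_vision_messages(
    "What is shown in this picture?",
    "https://example.com/photo.png",
)
payload = {"model": "gpt-4o-mini", "messages": messages}
print(json.dumps(payload, indent=2))
```

The same `messages` list can carry several text and image parts in one user turn.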

5. Extended Context Window:

The GPT-4o mini is great for jobs that need a lot of data input because it has a context window with 128K tokens and can handle up to 16K output tokens per request.
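
To make the two limits concrete, here is a minimal sketch of a budget check. It assumes that "128K" means 128,000 tokens, that "16K" means 16,384 tokens, and that the prompt and the reply share the same context window. The function name `output_budget` is hypothetical.

```python
CONTEXT_WINDOW = 128_000    # assumed exact size of the "128K" window
MAX_OUTPUT_TOKENS = 16_384  # assumed exact size of the "16K" output cap


def output_budget(prompt_tokens: int, requested_output: int) -> int:
    """Return how many output tokens can actually be requested.

    Raises ValueError if the prompt alone does not fit in the window.
    """
    if prompt_tokens >= CONTEXT_WINDOW:
        raise ValueError("prompt does not fit in the context window")
    remaining = CONTEXT_WINDOW - prompt_tokens
    return min(requested_output, MAX_OUTPUT_TOKENS, remaining)


print(output_budget(100_000, 20_000))  # → 16384 (capped by the output limit)
print(output_budget(120_000, 20_000))  # → 8000 (capped by the remaining window)
```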

6. Enhanced Non-English Text Handling:

The model can now handle non-English text more cheaply thanks to a better tokenizer that was shared with GPT-4o.

7. Advanced Safety Measures:

- Built-In Safety: The model has safety measures built in from the pre-training phase through post-training alignment. It uses reinforcement learning from human feedback (RLHF) to improve the accuracy and reliability of its responses.

- New ways to stay safe: The GPT-4o mini is the first model to use OpenAI’s instruction hierarchy method. This makes it safer for large-scale apps by making it more resistant to jailbreaks, prompt injections, and system prompt extractions.

8. Proven Practical Applications:

Trusted partners like Ramp and Superhuman have already tried GPT-4o mini and found that it works much better than GPT-3.5 Turbo at useful tasks like getting structured data and writing good emails.

9. Immediate Availability:

GPT-4o mini is available through the Assistants API, the Chat Completions API, and the Batch API. At launch (July 18, 2024), ChatGPT Free, Plus, and Team users could access it, with Enterprise users following the week after.
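
For the Batch API, requests are uploaded as a JSONL file with one request per line. The sketch below shows what such lines might look like for GPT-4o mini. The helper name `batch_line`, the `request-N` IDs, and the prompts are hypothetical, and the snippet only builds the text of the file without uploading anything.

```python
import json


def batch_line(custom_id: str, prompt: str) -> str:
    """Serialize one Chat Completions request as a Batch API JSONL line."""
    request = {
        "custom_id": custom_id,
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": "gpt-4o-mini",
            "messages": [{"role": "user", "content": prompt}],
        },
    }
    return json.dumps(request)


# Hypothetical prompts, used only for illustration.
prompts = ["Summarize invoice A.", "Summarize invoice B."]
jsonl = "\n".join(batch_line(f"request-{i}", p) for i, p in enumerate(prompts, 1))
print(jsonl)
```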

10. Reduction in AI Costs:

The cost per token of GPT-4o mini is 99% lower than that of text-davinci-003, which came out in 2022. This shows that OpenAI is serious about lowering costs while improving model capabilities.

Where can the GPT-4o mini API be accessed?

GPT-4o mini is available through several OpenAI API endpoints: the Assistants API, the Chat Completions API, and the Batch API.

GPT-4o mini can also be used in ChatGPT. Free, Plus, and Team users could start using it on launch day (July 18, 2024), with Enterprise users following the week after.
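
As a minimal sketch of a Chat Completions call, the code below builds the HTTP request with Python's standard library only. The helper names `build_request` and `ask` and the placeholder key are hypothetical, and it assumes your real key is stored in an `OPENAI_API_KEY` environment variable. OpenAI's official `openai` Python package wraps the same endpoint and is usually the more convenient choice.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for gpt-4o-mini."""
    body = {
        "model": "gpt-4o-mini",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the model's reply text (needs a real key)."""
    request = build_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Build a request with a placeholder key and inspect it without sending it.
request = build_request("Say hello in one short sentence.", "sk-placeholder")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

With a valid key set in the environment, `ask("Say hello in one short sentence.")` would send the request and return the reply text.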

GPT-4o vs GPT-4o mini: What Are the Differences?

1. Model Size and Cost

- GPT-4o: This is a full-sized, powerful model made to handle a lot of different types of jobs. Of course, it requires more computing power and costs more.

- GPT-4o mini: A smaller, lighter version that is much cheaper to run. It offers many of the same features for far less money, so more people can use it.

2. Performance and Speed

- GPT-4o: Because it has a bigger architecture, GPT-4o is very good at handling complicated jobs that use a lot of resources. It is the model that is used for jobs that need the most AI power.

- GPT-4o mini: GPT-4o mini is less expensive than GPT-3.5 Turbo while being more accurate. Because it is built to respond quickly, it suits real-time apps.

3. Current API Capabilities

- Both Models: The API currently lets you enter text and images and get text back.

- Future Support: Support for more modalities, such as audio and video, is planned for GPT-4o mini.

4. Application Versatility

- GPT-4o: It works best for AI applications that need to handle multimodal data without any problems. This is perfect for situations with a lot at stake where every little thing counts.

- GPT-4o mini: Perfect for many uses, especially when saving money and getting things up and running quickly are important. It is a great option for implementing AI-based solutions in many fields.

5. Practical Use Cases

- GPT-4o: Because it can do so many things, GPT-4o is best for situations that need to handle a lot of data, make complex decisions, and interact with others in many ways.

- GPT-4o mini: It can do some of the same things, but the GPT-4o mini is better when speed and low cost are important, like when you need real-time customer service or want to analyze data more quickly.

GPT-4o mini Pricing

The GPT-4o mini is meant to be a cheap AI model that lets a lot of people use real-time artificial intelligence. Here are the prices for the GPT-4o mini:

Input tokens cost 15 cents ($0.15) per million.

Output tokens cost 60 cents ($0.60) per million.

With this pricing, GPT-4o mini is a lot less expensive than earlier models. For example, it is over 60% less expensive than GPT-3.5 Turbo and about an order of magnitude cheaper than previous frontier models.

To clarify the terms:

- Input tokens are the text you send to the model to be processed.

- Output tokens are the text the model generates in response.

See OpenAI’s GPT-4o mini pricing page for more information.

Cost Comparison

- GPT-3.5 Turbo: The GPT-4o mini costs more than 60% less than the GPT-3.5 Turbo.

- Other Frontier Models: GPT-4o mini is about an order of magnitude cheaper than previous frontier models.

Practical Example

For a typical request, this is how you could work out the cost:

- Query: Suppose you send a prompt of 1,000 words (about 1,500 tokens) and get a reply of 500 words (about 750 tokens).

- Input cost: 1,500 tokens × $0.15 / 1,000,000 tokens = $0.000225

- Output cost: 750 tokens × $0.60 / 1,000,000 tokens = $0.00045

- Total cost of the query: $0.000675

At this price, GPT-4o mini can process large volumes of data for a fraction of the cost of older models, which makes it practical for many different situations.
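
The per-query arithmetic can be sketched as a small helper. It uses the prices quoted in this post ($0.15 and $0.60 per million tokens); the function name `query_cost` is hypothetical, and real bills depend on the exact token counts the API reports.

```python
INPUT_PRICE_PER_MILLION = 0.15   # USD per 1M input tokens
OUTPUT_PRICE_PER_MILLION = 0.60  # USD per 1M output tokens


def query_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the prices above."""
    input_cost = input_tokens * INPUT_PRICE_PER_MILLION / 1_000_000
    output_cost = output_tokens * OUTPUT_PRICE_PER_MILLION / 1_000_000
    return input_cost + output_cost


cost = query_cost(1_500, 750)
print(f"${cost:.6f}")  # → $0.000675
print(f"${cost * 1_000_000:,.2f} for one million such queries")
```

Scaling the per-query figure up to a million queries gives a more intuitive sense of what a production workload might cost.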

Conclusion

By adding the GPT-4o mini API to your app, you can make it much more useful. Your users will be able to interact with the app more easily and get smarter answers. The API makes it easier to add advanced AI features at low cost, and it can help your app stand out in a crowded market while giving users a smooth and engaging experience. Take your app to the next level by adding this technology.

FAQs

Q1. What is GPT-4o mini API integration?

A. GPT-4o mini API integration means incorporating the GPT-4o mini API into your application to use its AI capabilities. This lets your app provide smarter, more intuitive interactions by drawing on the model’s natural language processing features.

Q2. How does GPT-4o mini API integration benefit my app?

A. Integrating the GPT-4o mini API into your app enhances user interactions with intelligent, context-aware responses. It improves the overall user experience by making your app more responsive and engaging, which can increase user satisfaction and retention.

Q3. Is GPT-4o mini API integration difficult to implement?

A. No, GPT-4o mini API integration is designed to be straightforward. The API comes with comprehensive documentation and support, making it easy for developers to integrate and customize according to their specific needs.

Q4. What kind of applications can benefit from GPT-4o mini API integration?

A. A wide range of applications can benefit from GPT-4o mini API integration, including customer service bots, virtual assistants, content creation tools, and any app requiring advanced natural language understanding and generation capabilities.

Q5. What are the costs associated with GPT-4o mini API integration?

A. The cost of GPT-4o mini API integration depends on usage. The API is billed per token, at $0.15 per million input tokens and $0.60 per million output tokens. It’s advisable to review the pricing details on OpenAI’s official website to estimate the cost for your app’s needs and budget.